Market Making, Expected Value, and General Topics

June 28, 2020 | Updated July 6, 2020

Welcome to week two of my trading prep series! This week I’m going to focus most of my attention on learning and practicing market making, expected value, and other expanded topics. I’ll also post resources and links to additional problems as I go along for my documentation purposes. Thanks for tuning in!

Update: Instead of doing problems every day consecutively, I’ll be doing problems on weekdays and spending weekends reviewing problems and topics I’ve already covered.

Day Eight: June 29

A friend linked me to a blog post by Messy Matters on confidence intervals/overconfidence that you can find here. I’ve been spending some time with market making questions, so I found the exercise and results of the “study” pretty interesting. I also found this PowerPoint on recruiting tips when I was looking for new problems to do.

Problem 1. Make me a market on the temperature in an hour. Solution. I was thinking through this problem after taking a walk this morning. At the time, I estimated the temperature to be about (the actual temperature was ). Given it was , it’s still relatively early in the day and there’s quite a bit of room for the temperature to increase to before it plateaus in the afternoon. From my experience, the temperature on a typical summer day in San Francisco usually tops off around to . This morning was relatively cool, so I’d expect today to be one of the cooler days, topping off between to . Then, assuming that the temperature increases roughly linearly, I’d set my bid at and offer at .

After setting my initial spread, I’d adjust my interval according to how the second party/market maker chooses to buy from or sell to me. To simulate this, I sampled the trade price from a normal distribution centered at with and flipped a coin to determine whether I was being bought from or sold to at that price.

- Bid at : buying from me at a value leaning so far to the right in my range indicates that I severely underestimated the temperature in an hour, as the willingness to buy higher might be an attempt to execute an unfavorable trade with me to then later sell at a higher price with an acceptable margin. However, I also know the temperature cannot change too much so I’d adjust my spread to account for this new information by shifting my position to . I widened my range a bit since I’m pretty skeptical that my original range reflected the actual temperature well and I want to make sure to cover all my bases.

- Offer at : A willingness to sell indicates that, taking into account my decision-making, the second party believes that optimizes both margins and chance of trade execution. I wouldn’t expect the exact temperature to be since not all buyers with information on the weather would want to buy the exact of the instrument as it’s harder to later sell for profit. I’d adjust my spread down to .

Unfortunately, this simulation is flawed as I didn’t really have a second party with better information on the specific product being exchanged. I tried to adjust for that by using the sampler and randomizing but since the real value of the temperature at wasn’t known to either me or my fictitious party, I had to operate under the assumption that my initial spread wasn’t completely wrong, which could have been the case. Next time, I’ll probably try this with a partner. The actual temperature at was about so you can see how the spread deviated from that without this information, but this exercise still helps me practice adjusting my spread to new information.
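A minimal C++ sketch of the fake counterparty described above might look like the following. The mean, standard deviation, quote, and the rule of shifting the whole quote by a fixed `step` after each trade are all hypothetical placeholders (the post’s actual numbers and adjustments were judgment calls), so treat this as an illustration of the sampling step rather than a trading rule.

```cpp
#include <cassert>
#include <random>
#include <vector>

// Placeholder quote in degrees; the real numbers from the post did not survive.
struct Quote {
    double bid;
    double offer;
};

struct Trade {
    double price;
    bool counterpartyBuys;  // true: they buy from me; false: they sell to me
};

// Draws n fictitious trades: price ~ Normal(mean, sd), side chosen by a fair coin.
std::vector<Trade> sampleTrades(double mean, double sd, int n, unsigned seed) {
    assert(sd > 0 && n >= 0);
    std::mt19937 rng(seed);
    std::normal_distribution<double> price(mean, sd);
    std::bernoulli_distribution coin(0.5);
    std::vector<Trade> trades;
    trades.reserve(n);
    for (int i = 0; i < n; ++i) {
        double p = price(rng);
        bool buys = coin(rng);
        trades.push_back({p, buys});
    }
    return trades;
}

// Hypothetical update rule: shift the whole quote by `step` toward the side the
// counterparty traded on (up after they buy from me, down after they sell to me).
Quote updateQuote(const Quote& q, const Trade& t, double step) {
    double shift = t.counterpartyBuys ? step : -step;
    return {q.bid + shift, q.offer + shift};
}
```

Feeding each sampled trade through `updateQuote` in turn gives a crude version of the adjust-after-every-fill loop; a real counterparty with better information would replace the coin flip.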

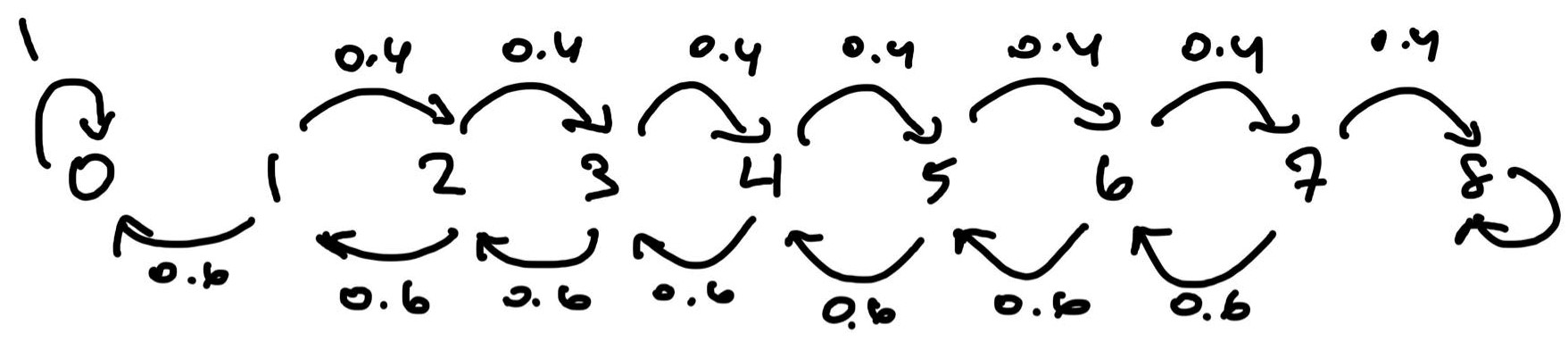

Problem 2. Smith is in jail and has dollars and can get out on bail if he has dollars. A guard agrees to make a series of bets with him, with Smith’s probability of winning and probability of losing . Find the probability that he can leave on bail before losing all his money if he bets one dollar every time versus if he bets as much as possible each round to get to dollars but nothing more than that. Which is the optimal strategy? Solution. This problem is from Aldous’s STAT 150 class and is a spinoff of the gambler’s ruin problem. If Smith bets one dollar at a time, the Markov chain that describes his money at each state follows:

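Let $N$ be the bail amount, let $p$ and $q = 1 - p$ be Smith’s probabilities of winning and losing a bet, and let $P_i$, $0 \le i \le N$, be the probability that Smith’s money reaches $N$ before $0$ when starting from state $i$. Then we have

$$P_0 = 0, \qquad P_N = 1, \qquad P_i = p\,P_{i+1} + q\,P_{i-1} \quad (0 < i < N).$$

You can solve this rather convoluted system of linear equations to find that $P_i = \frac{1 - (q/p)^i}{1 - (q/p)^N}$ (for $p \ne q$). Alternately, the transition matrix $T$ for this Markov chain has $T_{0,0} = T_{N,N} = 1$ and, for $0 < i < N$, $T_{i,i+1} = p$ and $T_{i,i-1} = q$, with every other entry equal to $0$.

Taking $T^n$ with $n$ sufficiently large gives us the limiting (absorption) behavior of this Markov chain, and entry $(i, N)$ of $T^n$ converges to $P_i$ as well!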

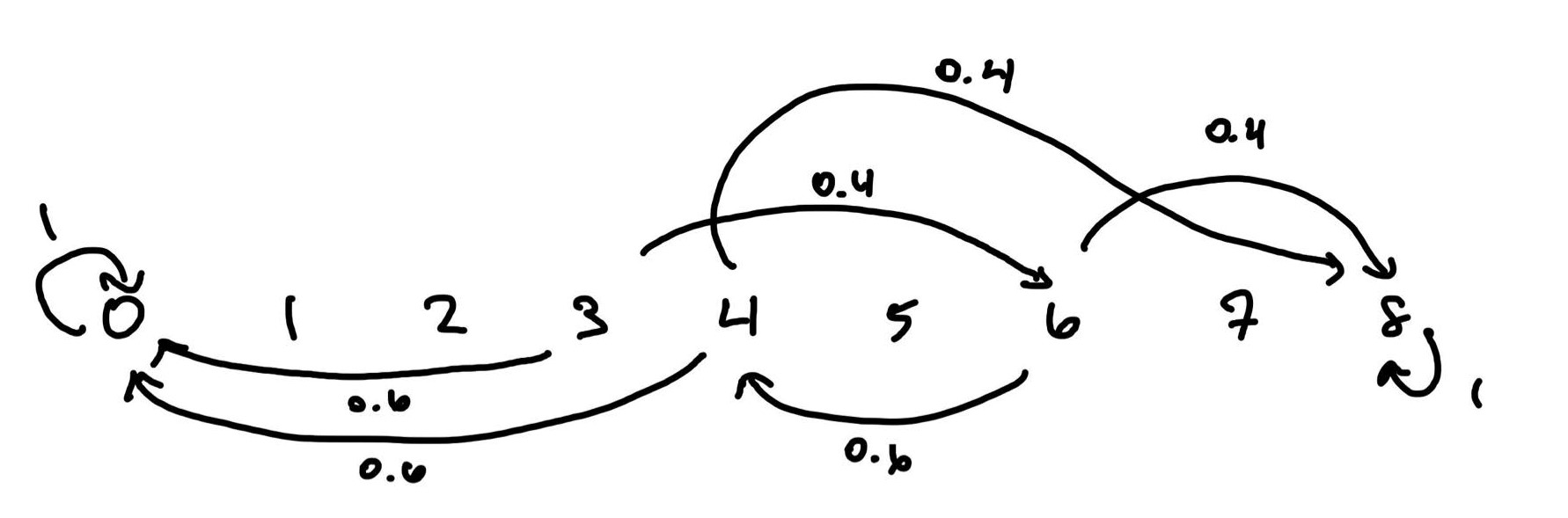

For the case that Smith bets as much as possible each round as needed to reach dollars, we have the Markov chain:

This in turn gives us the system of equations

Solving for this gives us . This is considerably higher than betting one dollar each time, so Smith should choose this strategy when betting.
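Here is a small C++ sketch for checking both strategies numerically. It repeatedly applies the chain’s transitions (the same idea as taking a high power of the transition matrix) to get the probability of reaching bail before going broke. The starting amount of \$3, bail of \$8, and win probability of 0.4 used in the example are illustrative assumptions, not the original problem’s values, which did not survive; `timidBet` and `boldBet` are hypothetical helper names.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Probability of reaching `goal` before 0 from `start`, when a stake of b wins b
// with probability p and loses b otherwise; betSize(x, goal) picks the stake at
// fortune x. Each sweep applies the transition matrix once, so after k sweeps
// v[start] equals entry (start, goal) of T^k.
template <typename BetFn>
double reachProbability(int start, int goal, double p, BetFn betSize, int iterations = 10000) {
    assert(0 <= start && start <= goal && 0 < p && p < 1);
    std::vector<double> v(goal + 1, 0.0);
    v[goal] = 1.0;  // bail reached (absorbing); v[0] = 0 is "broke" (absorbing)
    for (int it = 0; it < iterations; ++it) {
        std::vector<double> next = v;
        for (int x = 1; x < goal; ++x) {
            int b = betSize(x, goal);
            next[x] = p * v[x + b] + (1 - p) * v[x - b];
        }
        v = next;
    }
    return v[start];
}

int timidBet(int, int) { return 1; }  // one dollar every time
int boldBet(int x, int goal) { return x < goal - x ? x : goal - x; }  // all in, never past the goal

// Closed form for the timid strategy (valid for p != 1/2).
double timidClosedForm(int start, int goal, double p) {
    double r = (1 - p) / p;
    return (1 - std::pow(r, start)) / (1 - std::pow(r, goal));
}
```

Under those assumed numbers, `reachProbability(3, 8, 0.4, timidBet)` comes out to about 0.096 (matching `timidClosedForm`), while `reachProbability(3, 8, 0.4, boldBet)` gives 0.256, so bold play wins in this example too.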

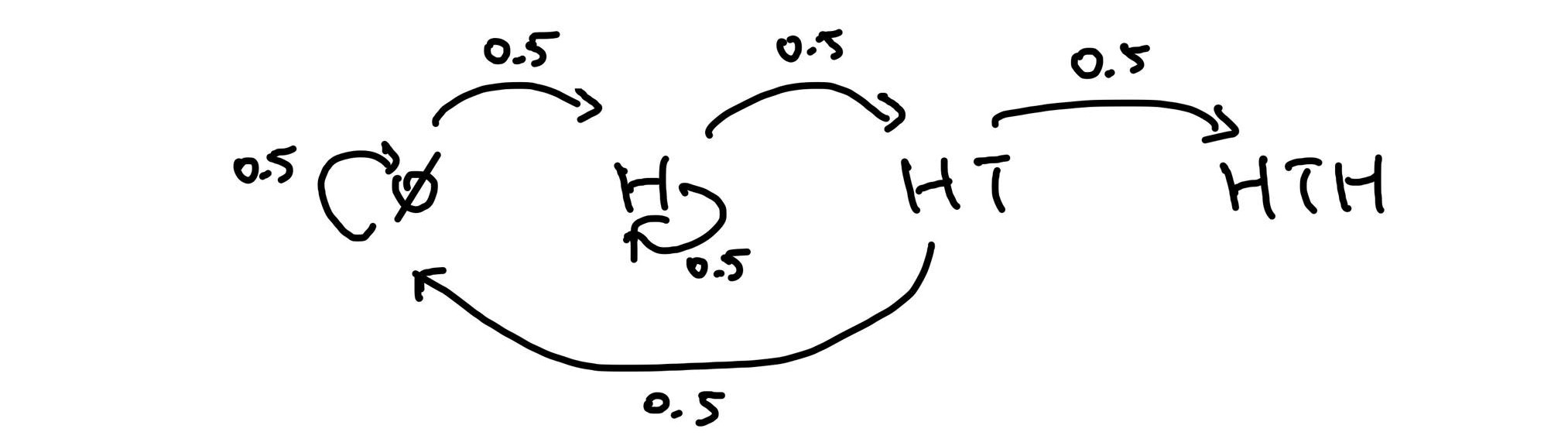

Problem 3. What is the expected number of tosses for us to toss a ?

Solution. This is another Markov chain problem. Note that we start all the way over whenever we roll a tails before our first heads so let’s call this state . We can also account for all the other possible states: , , and . Assuming a fair coin we have the following Markov chain:

Let’s denote as the expected number of turns to get from each state . Then we have the following system of linear equations:

Solving these gives so we expect it to take 10 turns to flip our first .
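The pattern itself was lost from this post, so the sketch below assumes $HTH$, a three-toss pattern starting with heads whose expected waiting time on a fair coin is 10. Rather than solving the Markov chain, it uses a different technique, the prefix/suffix overlap formula (often attributed to Conway): add $2^k$ for every $k$ where the first $k$ tosses of the pattern equal its last $k$ tosses.

```cpp
#include <cassert>
#include <cmath>
#include <string>

// Expected number of fair-coin tosses until `pattern` (e.g. "HTH") first appears:
// the sum of 2^k over every k such that the length-k prefix equals the length-k suffix.
double expectedTossesForPattern(const std::string& pattern) {
    assert(!pattern.empty());
    const std::size_t n = pattern.size();
    double expected = 0.0;
    for (std::size_t k = 1; k <= n; ++k) {
        // Compare the first k characters with the last k characters.
        if (pattern.compare(0, k, pattern, n - k, k) == 0) {
            expected += std::pow(2.0, static_cast<double>(k));
        }
    }
    return expected;
}
```

For example, `expectedTossesForPattern("HTH")` returns 10, `"HHT"` gives 8, and `"HHH"` gives 14.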

Problem 4. Players and play a marble game. Each player has a red and a blue marble in a bag and draws one marble from their bag each round. If both marbles are blue wins and if both are red wins . If the two colors are different, wins . If you can play this game 100 times, who would you play as?

Solution. Since there is an equal chance of picking marbles with different colors as picking two marbles that are the same color, the expected value of playing player versus player is the same (EV ). Then, to compare risk, we can calculate the variance of the payoff for each player:

’s payoff has larger variance and he holds more risk, so it’s advantageous to play as player when risk-averse. However, since 100 games is relatively many, the average payoff per game approaches the expected payoff of playing (the law of large numbers), which reduces the risk of playing as player .
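To make the variance comparison concrete, here is a C++ sketch computing the mean and variance of each player’s per-game payoff. The payoffs are hypothetical, since the originals did not survive: $A$ receives 3 on two blues and 1 on two reds, and $B$ receives 2 when the colors differ, which keeps both expected values at 1. The `stdOfAverage` helper shows how the spread of the average payoff shrinks like $1/\sqrt{n}$ over $n$ games.

```cpp
#include <cassert>
#include <cmath>
#include <utility>
#include <vector>

struct Outcome {
    double probability;
    double payoff;
};

// Mean and variance of a single game's payoff for one player.
std::pair<double, double> meanAndVariance(const std::vector<Outcome>& dist) {
    double totalProb = 0.0, mean = 0.0, secondMoment = 0.0;
    for (const Outcome& o : dist) {
        totalProb += o.probability;
        mean += o.probability * o.payoff;
        secondMoment += o.probability * o.payoff * o.payoff;
    }
    assert(std::fabs(totalProb - 1.0) < 1e-9);  // probabilities must sum to 1
    return {mean, secondMoment - mean * mean};
}

// Standard deviation of the average payoff over n independent games.
double stdOfAverage(double variance, int n) {
    assert(n > 0 && variance >= 0);
    return std::sqrt(variance / n);
}
```

With these placeholder payoffs, $A$’s per-game variance is 1.5 versus 1 for $B$, but over 100 games the standard deviation of $A$’s average payoff is only about 0.12.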

Problem 5. You are trapped in a dark cave with three indistinguishable exits on the walls. One of the exits takes you 3 hours to travel and takes you outside. One of the other exits takes 1 hour to travel and the other takes 2 hours, but both drop you back in the original cave through the ceiling, which is unreachable from the floor of the cave. You have no way of marking which exits you have attempted. What is the expected time it takes for you to get outside? Solution. This is a simple EV problem. Since the doors are indistinguishable and we can’t mark our escape attempts, each exit is equally likely to be chosen on every attempt, independently of earlier attempts. Let $T$ be a random variable measuring the time it takes to make it out of the cave. There is a $\frac13$ chance we make it outside in time $3$, a $\frac13$ chance we waste an hour and end up back in the cave, and a $\frac13$ chance we waste two hours and end up back in the cave. Then,

$$E[T] = \frac13 \cdot 3 + \frac13\left(1 + E[T]\right) + \frac13\left(2 + E[T]\right).$$

Solving this equation gives $E[T] = 6$, so we expect to spend $6$ hours attempting to escape before we’re finally outside.
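As a sanity check on $E[T] = 6$, here is a hedged Monte Carlo sketch in C++. It assumes each attempt picks one of the three exits uniformly at random, independent of earlier attempts, exactly as in the setup above; the function name, trial count, and seed are arbitrary.

```cpp
#include <cassert>
#include <random>

// Monte Carlo estimate of the expected escape time (in hours).
double simulateCaveEscape(int trials, unsigned seed) {
    assert(trials > 0);
    std::mt19937 rng(seed);
    std::uniform_int_distribution<int> exitPick(0, 2);
    const double hours[3] = {3.0, 1.0, 2.0};  // exit 0 leads outside; 1 and 2 loop back
    double total = 0.0;
    for (int t = 0; t < trials; ++t) {
        double elapsed = 0.0;
        int exit;
        do {
            exit = exitPick(rng);  // no memory of earlier attempts
            elapsed += hours[exit];
        } while (exit != 0);
        total += elapsed;
    }
    return total / trials;
}
```

With a couple hundred thousand trials the estimate should land close to 6.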

Day Nine: June 30

For today’s problems I took a step back and reviewed some general problems and conditional probability.

Problem 1. A deck of cards has 3 Red and 3 Blue cards. At each stage, a card is selected at random. If it is Red, it is removed from the deck. If it is Blue then the card is not removed and we move to the next stage. Find the average number of steps till the process ends.

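Solution. Note that the process ends when there are no red cards left. To get started, I’ll draw the Markov chain for this problem:

Now let’s index the states by the number of red cards left: State $3$, State $2$, State $1$, and State $0$ (the process has ended). Let $E_r$ denote the expected time until the process ends from State $r$. With $r$ red cards and $3$ blue cards in the deck, a red card is drawn with probability $\frac{r}{r+3}$, so we have the following equations:

$$E_3 = 1 + \tfrac12 E_3 + \tfrac12 E_2, \qquad E_2 = 1 + \tfrac35 E_2 + \tfrac25 E_1, \qquad E_1 = 1 + \tfrac34 E_1 + \tfrac14 E_0, \qquad E_0 = 0.$$

Solving this system gives $E_1 = 4$, $E_2 = 6.5$, and $E_3 = 8.5$.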
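A shortcut gives the answer directly: with $r$ red cards and 3 blue cards left, each draw removes a red with probability $\frac{r}{r+3}$, so that stage takes $\frac{r+3}{r}$ draws on average (a geometric wait). A minimal C++ sketch summing these stages, with a hypothetical helper name:

```cpp
#include <cassert>

// Expected number of draws until every red card is removed, when drawn reds are
// discarded and drawn blues are put back. Each stage with r reds left is a
// geometric wait with success probability r / (r + blue).
double expectedDrawsUntilNoRed(int red, int blue) {
    assert(red >= 0 && blue >= 0);
    double total = 0.0;
    for (int r = red; r >= 1; --r) {
        total += static_cast<double>(r + blue) / r;
    }
    return total;
}
```

`expectedDrawsUntilNoRed(3, 3)` returns $2 + 2.5 + 4 = 8.5$.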

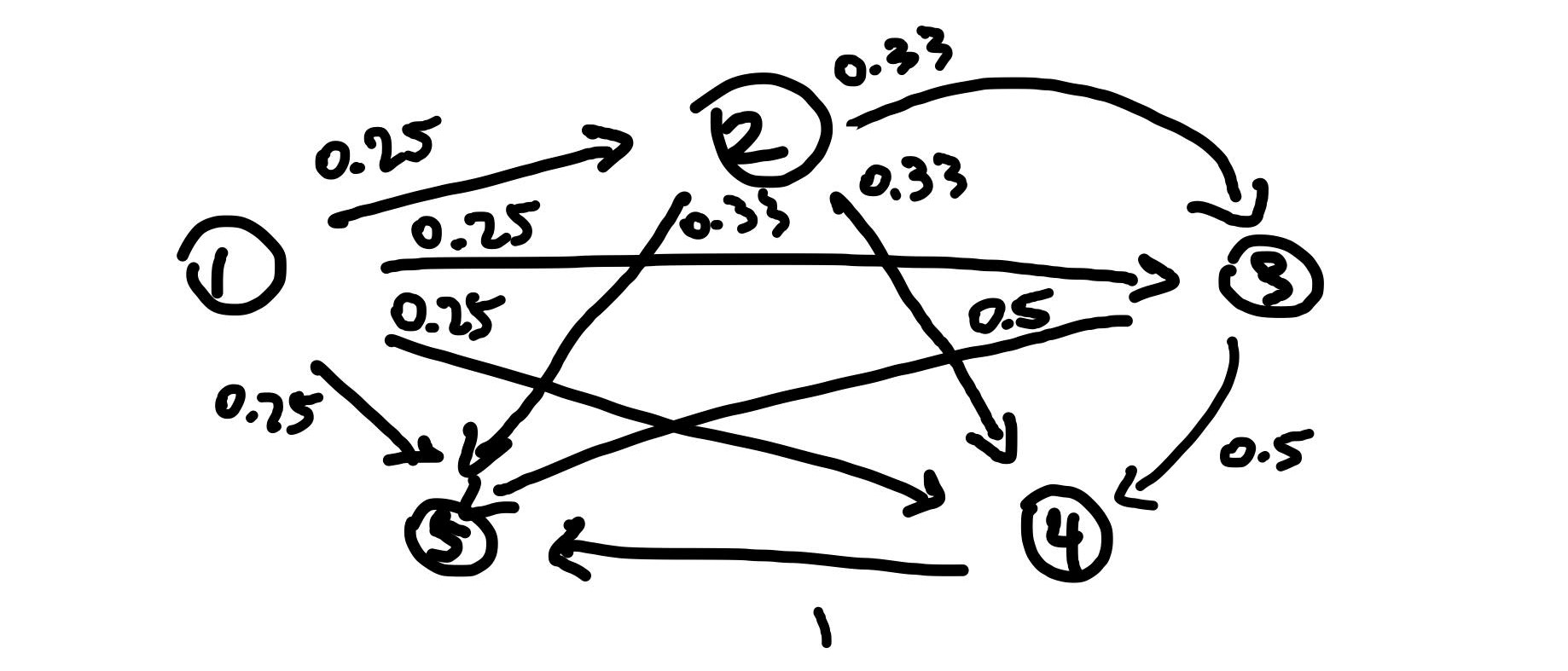

Problem 2. A process moves on the integers 1, 2, 3, 4, and 5. It starts at 1 and, on each successive step, moves to an integer greater than its present position, moving with equal probability to each of the remaining larger integers. State five is an absorbing state. Find the expected number of steps to reach state five. Solution. The Markov chain for this problem is

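This gives us the transition matrix

$$T = \begin{pmatrix} 0 & \frac14 & \frac14 & \frac14 & \frac14 \\ 0 & 0 & \frac13 & \frac13 & \frac13 \\ 0 & 0 & 0 & \frac12 & \frac12 \\ 0 & 0 & 0 & 0 & 1 \\ 0 & 0 & 0 & 0 & 1 \end{pmatrix}$$

We’re interested in finding the expected number of steps to reach state five from state one, so let $E_k$ denote the expected number of steps to reach state five from state $k$. Then we have the equations

$$E_5 = 0, \quad E_4 = 1 + E_5, \quad E_3 = 1 + \tfrac12(E_4 + E_5), \quad E_2 = 1 + \tfrac13(E_3 + E_4 + E_5), \quad E_1 = 1 + \tfrac14(E_2 + E_3 + E_4 + E_5).$$

Solving this system of linear equations gives us $E_4 = 1$, $E_3 = \frac32$, $E_2 = \frac{11}{6}$, and $E_1 = \frac{25}{12}$, so we expect about $2.08$ steps to reach state five.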
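The equations can be solved from the top down. This C++ sketch, with a hypothetical function name and the top state $t$ as a parameter, does the backward recursion $E_k = 1 + \frac{1}{t-k}\sum_{j>k} E_j$ with $E_t = 0$:

```cpp
#include <cassert>
#include <vector>

// Expected steps to reach state `top` from state 1 when each step jumps
// uniformly to one of the larger integers.
double expectedStepsToTop(int top) {
    assert(top >= 1);
    std::vector<double> e(top + 1, 0.0);  // e[top] = 0
    double suffixSum = 0.0;               // running sum of e[j] for j > k
    for (int k = top - 1; k >= 1; --k) {
        e[k] = 1.0 + suffixSum / (top - k);
        suffixSum += e[k];
    }
    return e[1];
}
```

`expectedStepsToTop(5)` returns $\frac{25}{12} \approx 2.083$, which also equals $1 + \frac12 + \frac13 + \frac14$.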

Problem 3. An unfair coin has probability $p$ of tossing heads and $1 - p$ of tossing tails. How do you make this coin fair? Solution. Note that $P(H) = p$ and $P(T) = 1 - p$. The only way for us to make the coin fair is to somehow combine outcomes, since tossing heads and tossing tails have different probabilities. Note that $HT$ is tossed with probability $p(1-p)$ and $TH$ is tossed with probability $(1-p)p$ (note these are equal). Also $HH$ has probability $p^2$ and $TT$ has $(1-p)^2$. We can modify the typical rules of coin flipping in the following way to make it fair: toss the coin twice, and let heads be tossing an $HT$ and tails be tossing a $TH$, which have equal probability and so are fair. Then, to account for the remaining two possible outcomes, we just start over when we get $HH$ or $TT$, which gives us an automatic reset when we toss two of the same face. Technically, a run such as $HHT$ also contains an $HT$, but note that $P(HHT) = p^2(1-p) \ne (1-p)^2 p = P(TTH)$ when $p \ne \frac12$, so we can’t simply take the first occurrence of $HT$ or $TH$ in a running sequence of tosses: a fair outcome is only guaranteed when the tosses are grouped into non-overlapping pairs.
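The pairing trick (usually credited to von Neumann) is short in C++. The bias of 0.8 in the usage below is just an example; the hypothetical `fairFlip` helper only assumes the coin’s tosses are independent with a fixed heads probability strictly between 0 and 1.

```cpp
#include <cassert>
#include <random>

// Toss the biased coin in pairs: HT -> heads (true), TH -> tails (false),
// HH or TT -> discard the pair and toss again.
template <typename Rng>
bool fairFlip(Rng& rng, double pHeads) {
    assert(0 < pHeads && pHeads < 1);
    std::bernoulli_distribution biased(pHeads);
    while (true) {
        bool first = biased(rng);
        bool second = biased(rng);
        if (first != second) return first;  // HT and TH each have probability p(1 - p)
    }
}
```

Calling `fairFlip(rng, 0.8)` many times with a `std::mt19937 rng` should give heads close to half the time, at the cost of discarding many pairs when the bias is strong.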

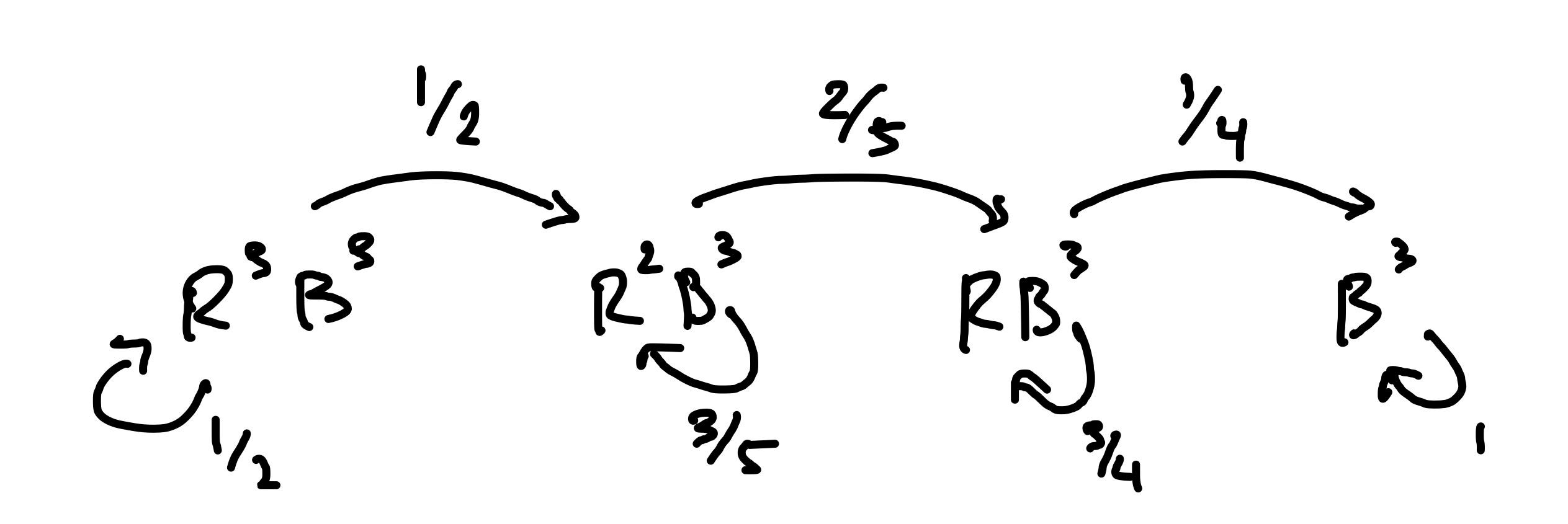

Problem 4. This is a variation of the gambler’s ruin problem. You have and want to reach . Your probability of success is and failure is . You may only place bets in multiples of . How would you maximize the probability of reaching ? Solution. I would bet each time I play. Note that from we can bet either or . We have of reaching from each of these bets, but chance of reaching if we bet dollars and chance of reaching if we bet dollars. Once we reach , we can no longer bet, as all of our bets must be a multiple of . Then, if we bet , we have a total of probability to reach our goal. If we bet , then we have probability of getting to the first round. If this doesn’t happen and we lose the first bet instead, then we’re at and we have the opportunity to go down to (probability ), in which case we’re done and can’t bet anymore, or go back up of and play our original scenario over again. Clearly, betting per round is optimal, as we have probability of winning the first round (matching the probability of winning when betting ) and there is also some additional probability that we can reach in later rounds.
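Since the specific amounts in this problem were lost, here is a general dynamic-programming sketch in C++ for checking bet-sizing claims like this one: for each fortune it tries every allowed stake (multiples of `unit`, at most the current fortune) and keeps the best. Treating a winning bet that overshoots the target as reaching it is my assumption, and the function name and parameters are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// v[x] = highest probability of ever reaching `goal` from fortune x when every
// stake must be a positive multiple of `unit` and at most the current fortune.
// Value iteration starting from 0 climbs to the optimal reach probabilities.
std::vector<double> bestReachProbabilities(int goal, int unit, double p, int iterations = 10000) {
    assert(goal > 0 && unit > 0 && 0 < p && p < 1);
    std::vector<double> v(goal + 1, 0.0);
    v[goal] = 1.0;
    for (int it = 0; it < iterations; ++it) {
        std::vector<double> next = v;
        for (int x = 1; x < goal; ++x) {
            double best = 0.0;  // fortunes below `unit` cannot bet at all
            for (int b = unit; b <= x; b += unit) {
                int up = std::min(x + b, goal);  // overshooting counts as reaching the goal
                best = std::max(best, p * v[up] + (1 - p) * v[x - b]);
            }
            next[x] = best;
        }
        v = next;
    }
    return v;
}
```

For instance, with a target of 4, unit stakes of 1, and $p = 0.4$, it gives 0.4 from a fortune of 2, which is just the bold bet of 2; with stakes restricted to multiples of 2, a fortune of 1 is stuck at 0.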

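Problem 5. Two players, $A$ and $B$, toss a fair coin in that order. The sequence of heads and tails is recorded. If there is a sequence $HT$ in the tosses, then whoever tossed the tails wins and the game ends. What is the probability that $A$ wins? Solution. Let $P(A)$ and $P(B)$ denote the probabilities that $A$ and $B$ win respectively. Note that $P(A) + P(B) = 1$. This next part is pretty cool: we can condition on $A$’s first coin toss, which has probability $\frac12$ to be $H$ and probability $\frac12$ to be $T$. Then we have $P(A) = \frac12 P(A \mid T) + \frac12 P(A \mid H)$. If $A$ tosses tails, then $B$ essentially becomes the first to toss and so $P(A \mid T) = P(B) = 1 - P(A)$. If $A$ tosses heads, however, we can condition the probability further on $B$’s first toss. $B$ has probability $\frac12$ of tossing $T$, in which case $A$ loses, and probability $\frac12$ of tossing $H$, which essentially brings us to the scenario in which $B$ is the first person to toss an $H$ (with $A$ tossing next), so $A$ has probability $1 - P(A \mid H)$ of winning. Then we have

$$P(A \mid H) = \frac12 \cdot 0 + \frac12\left(1 - P(A \mid H)\right) \implies P(A \mid H) = \frac13.$$

We can then plug this back into the original expression, $P(A) = \frac12\left(1 - P(A)\right) + \frac12 \cdot \frac13$, to find that $P(A) = \frac49$.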
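To double-check the answer of $\frac49$, here is an exact C++ sketch that tracks two things: whose turn it is and whether the previous toss was heads. It iterates the win-probability equations for those four states until they settle; the state encoding and function name are my own, not from the original solution.

```cpp
#include <cassert>

// w[turn][lastH] = probability that A wins, where turn 0 = A to toss, turn 1 = B,
// and lastH says whether the previous toss was heads. Fixed-point iteration.
double probabilityAWins(int iterations = 200) {
    assert(iterations > 0);
    double w[2][2] = {{0.0, 0.0}, {0.0, 0.0}};
    for (int it = 0; it < iterations; ++it) {
        double next[2][2];
        for (int turn = 0; turn < 2; ++turn) {
            for (int lastH = 0; lastH < 2; ++lastH) {
                int other = 1 - turn;
                // Tails after a head completes HT, so the current tosser wins.
                double tails = lastH ? (turn == 0 ? 1.0 : 0.0) : w[other][0];
                double heads = w[other][1];
                next[turn][lastH] = 0.5 * heads + 0.5 * tails;
            }
        }
        for (int a = 0; a < 2; ++a)
            for (int b = 0; b < 2; ++b) w[a][b] = next[a][b];
    }
    return w[0][0];  // A to toss first, no previous toss
}
```

`probabilityAWins()` converges to $0.444\ldots = \frac49$.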

Day Ten: July 1

Come back later for the rest of today’s problems! My friend recommended that I take a look at Fifty Challenging Problems in Probability, and I’m taking a couple of today’s problems from it.

Problem 1. You play a game with an urn that contains blue balls, red balls, and a yellow ball. For each red ball you draw you gain a dollar, and if you draw the yellow ball you lose everything and the game ends. What is your strategy for this game given that you can decide to stop or redraw after each turn? Solution. Note the payoff for each blue ball draw is and the payoff for the yellow ball is where is the number of red balls already drawn. If we let be the number of blue balls already drawn, we can derive the marginal expected value (not sure if this is a term):

Our marginal expected value should be greater than $0$ for us to keep playing. Then, setting the numerator to $0$ and solving gives us . This is the number of balls that have already been drawn, so after drawing balls our marginal expected value is still positive, and we’ll draw a th one. Then we draw balls and call it a day (of course, this will sometimes be cut short if we draw the yellow ball before then).
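Here is a hedged C++ sketch of the stopping problem, assuming an urn with only $n$ red balls worth \$1 each and a single yellow ball that wipes out your winnings (the original counts did not survive). It solves the problem by backward induction, which lets us check that stopping once the marginal EV $\frac{n-2k}{n-k+1}$ (after $k$ reds) is no longer positive, i.e. once $k \ge n/2$, is actually optimal. The names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

struct UrnResult {
    double expectedValue;  // optimal expected winnings before the first draw
    int stopAfter;         // fewest reds drawn at which stopping is optimal
};

// value[k] = optimal expected final winnings after k reds and no yellow yet.
UrnResult solveUrn(int n) {
    assert(n >= 0);
    std::vector<double> value(n + 1);
    value[n] = n;  // only the yellow ball is left, so stop
    int stopAfter = n;
    for (int k = n - 1; k >= 0; --k) {
        double ballsLeft = n - k + 1;                          // n - k reds plus the yellow
        double drawAgain = (n - k) / ballsLeft * value[k + 1];  // yellow leaves you with 0
        value[k] = std::max(static_cast<double>(k), drawAgain);
        if (static_cast<double>(k) >= drawAgain) stopAfter = k;
    }
    return {value[0], stopAfter};
}
```

With $n = 9$, `solveUrn(9)` stops after 5 reds with an optimal expected value of 2.5, which is $5$ times the $\frac12$ chance that the yellow ball is not among the first five draws.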

Problem 2. A drawer contains red socks and black socks. When two socks are chosen at random, the probability that both are red is . How small can the number of socks in the drawer be? Solution. Let and denote the number of red and black socks respectively. Since we are selecting socks without replacement, we have

Then,

Note that since . So if we choose and plug it into the quadratic equation to solve for , in which case we get . Then, the minimum number of socks in the drawer is .
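A brute-force search in C++ confirms the smallest drawer. It assumes the target probability is $\frac12$, the value in the version of this problem in Fifty Challenging Problems in Probability; the function name is hypothetical, and the check uses integer arithmetic to avoid rounding.

```cpp
#include <cassert>
#include <utility>

// Smallest drawer (red, black) with P(both red) = num/den when two socks are drawn
// without replacement, i.e. red(red - 1) * den == num * total(total - 1).
std::pair<int, int> smallestDrawer(long long num, long long den, int maxTotal = 1000) {
    assert(num > 0 && den > 0);
    for (int total = 2; total <= maxTotal; ++total) {
        for (int red = 2; red <= total; ++red) {
            long long lhs = 1LL * red * (red - 1) * den;
            long long rhs = num * total * (total - 1);
            if (lhs == rhs) return {red, total - red};
        }
    }
    return {-1, -1};  // no solution within maxTotal
}
```

`smallestDrawer(1, 2)` returns 3 red and 1 black sock, i.e. 4 socks in total.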

Problem 3. Mr. Brown always bets a dollar on the number at roulette against the advice of his friend. To help cure Mr. Brown of playing roulette, his friend bets Brown at even money that Mr. Brown will be behind at the end of plays. How is the cure working? Mr. Brown is paid times his bet amount when his number comes up, and roulette has numbers to bet on. Solution. In order to break even in rounds, Mr. Brown need only win one turn. So he’ll be behind only if he loses all turns, which has probability , and that is exactly the probability that he loses his bet with his friend. Note also that his EV from roulette after 36 turns is

Then Mr. Brown’s EV of betting with his friend is

So this cure doesn’t seem to be working too well: Mr. Brown’s EV for each cycle of turns is whenever he makes the bet with his friend.
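Here is the arithmetic as a small C++ sketch. Since the numbers were lost, every parameter is a placeholder: the example assumes a 38-number wheel paying 35 to 1, 36 plays per cycle, and a \$20 side bet. The function name is hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Brown's expected net result over one cycle of `plays` one-dollar bets on a single
// number, plus an even-money side bet of `sideBet` that he ends the cycle behind.
double brownCycleEV(int numbers, int payout, int plays, double sideBet) {
    assert(numbers > 1 && payout > 0 && plays > 0);
    assert(payout + 1 >= plays);  // then a single hit means he is not behind
    double pWin = 1.0 / numbers;
    double rouletteEV = plays * (pWin * payout - (1 - pWin));
    double pBehind = std::pow(1 - pWin, plays);  // behind only if he never hits
    double sideEV = sideBet * (1 - pBehind) - sideBet * pBehind;
    return rouletteEV + sideEV;
}
```

With those assumed values, `brownCycleEV(38, 35, 36, 20.0)` comes out to roughly +2.79 dollars per cycle: about -1.89 from roulette and +4.69 from the side bet.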

Problem 4. We throw three dice one by one. What is the probability that we roll three numbers in strictly increasing order? Solution. Note that in order for the three numbers to be in strictly increasing order, they have to be distinct. The probability of rolling three distinct numbers is $\frac66 \cdot \frac56 \cdot \frac46 = \frac59$. When conditioned on rolling three distinct numbers, the probability of rolling them in strictly increasing order depends only on how they are arranged. There is only one correct arrangement, but there are $3! = 6$ equally likely ways to arrange the three numbers, so the conditional probability is $\frac16$. Then we have

$$P(\text{strictly increasing}) = \frac59 \cdot \frac16 = \frac{5}{54},$$

so there is a $\frac{5}{54} \approx 0.093$ probability of rolling such an outcome.
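Brute-force enumeration in C++ gives the same count: there are $\binom{6}{3} = 20$ strictly increasing outcomes out of $6^3 = 216$, and $\frac{20}{216} = \frac{5}{54}$. A minimal sketch with a hypothetical helper name:

```cpp
#include <cassert>

// Counts ordered outcomes (a, b, c) of three dice with a < b < c.
int countStrictlyIncreasing(int faces) {
    assert(faces >= 1);
    int count = 0;
    for (int a = 1; a <= faces; ++a)
        for (int b = 1; b <= faces; ++b)
            for (int c = 1; c <= faces; ++c)
                if (a < b && b < c) ++count;
    return count;
}
```

`countStrictlyIncreasing(6)` returns 20.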

Problem 5. The game of craps, played with two dice, is one of America’s fastest and most popular gambling games. These are the rules: we throw two dice. If we roll a 7 or an 11 on the first throw, we win immediately, and if we roll a 2, 3, or 12, we lose immediately. Any other throw is called a “point”. If the first toss is a point, then we keep rolling until we roll our point again (and win) or roll a 7 (and lose). What is the probability of winning? Solution. I thought this was a Markov chain problem at first, but a closer look made me realize it was just a bit of conditional probability. Note how I got the numbers: there are 36 outcomes for two dice. There is one way to roll a 2, one way to roll a 12, and two ways to roll a 3. Then the probability of losing the first round is $\frac{4}{36} = \frac19$. Additionally, there are six ways to roll a 7 and two ways to roll an 11, so our probability of winning the first round is $\frac{8}{36} = \frac29$, and there is a $\frac23$ chance of rolling a point. Now we just need to find the probability of rolling a point and then winning, and add that to the probability of winning the first round to arrive at the total probability of winning. Note that there is a $\frac{6}{36} = \frac16$ probability that we roll a 4 or a 10, $\frac{8}{36} = \frac29$ that we roll a 5 or a 9, and $\frac{10}{36} = \frac{5}{18}$ of rolling a 6 or an 8.

Note that in the event that we roll a point in our first round, we need only consider the probabilities of rerolling the point (to win) or a 7 (to lose), as rolling anything else brings us back to our original scenario of wanting to roll the point. Then, the point comes before a 7 with probability $\frac{3}{3+6} = \frac13$ if the point is 4 or 10, $\frac{4}{4+6} = \frac25$ if it is 5 or 9, and $\frac{5}{5+6} = \frac{5}{11}$ if it is 6 or 8 (these can all be calculated from the number of ways to roll the point vs. the six ways to roll a 7). Then, the probability of rolling a point on the first turn and going on to win is

$$\frac16 \cdot \frac13 + \frac29 \cdot \frac25 + \frac{5}{18} \cdot \frac{5}{11} = \frac{1}{18} + \frac{4}{45} + \frac{25}{198} = \frac{134}{495}.$$

Adding this to $\frac29 = \frac{110}{495}$, which is our probability of winning round 1, we have a total probability of winning of $\frac{244}{495} \approx 0.493$, which is relatively fair for a casino game.
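The whole calculation fits in a short C++ sketch that counts the ways $w_s$ to roll each sum $s$ and then applies the point-before-seven probability $\frac{w_s}{w_s + 6}$ for each point. The function name is mine, and it assumes the standard pass-line rules (win on 7 or 11, lose on 2, 3, or 12, otherwise keep rolling for the point).

```cpp
#include <cassert>

// Exact probability of winning at craps, summing over the first roll.
double crapsWinProbability() {
    int ways[13] = {0};  // ways[s] = number of ordered dice pairs with sum s
    for (int a = 1; a <= 6; ++a)
        for (int b = 1; b <= 6; ++b) ++ways[a + b];
    assert(ways[7] == 6);
    double win = 0.0;
    for (int s = 2; s <= 12; ++s) {
        double pFirst = ways[s] / 36.0;
        if (s == 7 || s == 11) {
            win += pFirst;  // natural: immediate win
        } else if (s == 2 || s == 3 || s == 12) {
            // craps: immediate loss, contributes nothing
        } else {
            // point: win if s is rolled again before a 7
            win += pFirst * ways[s] / static_cast<double>(ways[s] + ways[7]);
        }
    }
    return win;
}
```

It returns $\frac{244}{495} \approx 0.4929$.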

Day Eleven: July 2

Dynamic programming is also relatively fair game at some trading firms so I’ve included some dp questions for future problems.

Problem 1. A basketball player is taking free throws. She scores one point if the ball passes through the hoop and zero points if she misses. She has scored on her first throw and missed on her second. For each of the following throws, the probability of her scoring is the fraction of throws she has made so far. For example, if she has scored $k$ points after the $n$th throw, the probability that she will score in the $(n+1)$th throw is $\frac{k}{n}$. After throws (including the first and the second), what is the probability that she scores exactly baskets? Solution. This is another problem from A Practical Guide. I approached this problem with the mindset of Markov chains at first, but then I realized that I could build it up inductively with conditional probability. Let’s define $P_{n,k}$ to be the probability that the player has scored $k$ baskets after $n$ shots, where $1 \le k \le n - 1$. Since we make the first shot and miss the second, the third shot has success probability $\frac12$. For $P_{n+1,k}$, we can condition on whether the player had $k - 1$ or $k$ baskets after $n$ shots. Then,

$$P_{n+1,k} = P_{n,k-1} \cdot \frac{k-1}{n} + P_{n,k} \cdot \frac{n-k}{n}.$$

The pattern that seems to emerge is $P_{n,k} = \frac{1}{n-1}$ for every $1 \le k \le n - 1$, regardless of what $k$ is (obviously $k \le n - 1$ since we have missed the second shot), which we can prove inductively.

Base Case: $P_{2,1} = 1 = \frac{1}{2-1}$ is our base case.

Inductive Step: Suppose that $P_{n,k} = \frac{1}{n-1}$ holds for all $1 \le k \le n - 1$. Then for $1 \le k \le n$ (at $k = 1$ and $k = n$ the out-of-range term has coefficient $0$):

$$P_{n+1,k} = \frac{1}{n-1} \cdot \frac{k-1}{n} + \frac{1}{n-1} \cdot \frac{n-k}{n} = \frac{1}{n-1} \cdot \frac{n-1}{n} = \frac1n.$$

Then the probability of making exactly $k$ shots out of $n$ is $\frac{1}{n-1}$, whatever $k$ is.
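The recursion can also be run forward numerically. This C++ sketch, with a hypothetical helper name, tracks the full distribution of baskets after each throw; for any $n$ the result should be flat at $\frac{1}{n-1}$.

```cpp
#include <cassert>
#include <vector>

// dist[k] = P(exactly k baskets after n throws), given a make on throw 1, a miss on
// throw 2, and later throws scoring with probability (makes so far) / (throws so far).
std::vector<double> basketDistribution(int n) {
    assert(n >= 2);
    std::vector<double> dist(n + 1, 0.0);
    dist[1] = 1.0;  // after two throws: exactly one basket
    for (int throws = 2; throws < n; ++throws) {
        std::vector<double> next(n + 1, 0.0);
        for (int k = 1; k < throws; ++k) {
            double pMake = static_cast<double>(k) / throws;
            next[k + 1] += dist[k] * pMake;  // makes the next throw
            next[k] += dist[k] * (1 - pMake);  // misses the next throw
        }
        dist = next;
    }
    return dist;
}
```

For example, `basketDistribution(100)[50]` is $\frac{1}{99} \approx 0.0101$.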

Problem 2. Dynamic Programming: Given two arrays of numbers, find the length of the longest subsequence present in both arrays. For example, the length of the longest subsequence present in both and is . Solution. Our goal is to build a method that returns the length of the longest common subsequence (LCS). Let’s look at the brute-force solution first: we can enumerate each of the subsequences of the first array and check whether it is also a subsequence of the second array. Assuming the first array has length $m$ and the second has length $n$, there are $\binom{m}{k}$ subsequences of length $k$, so $\sum_{k=0}^{m} \binom{m}{k} = 2^m$ candidates in total, and each check takes $O(n)$ time, giving $O(n \cdot 2^m)$ time for the brute force. Now let’s approach it with dynamic programming: we can build an $(m+1) \times (n+1)$ matrix in which entry $(i, j)$ holds the length of the longest common subsequence of the first $i$ elements of array1 and the first $j$ elements of array2. Then we have the following solution in C++:

```cpp
#include <algorithm>
#include <vector>

// Returns the length of the longest common subsequence of array1 and array2.
int largestCommonSubsequence(const std::vector<int>& array1, const std::vector<int>& array2) {
    const int m = static_cast<int>(array1.size());
    const int n = static_cast<int>(array2.size());

    // lcs[i][j] = LCS length of the first i elements of array1 and first j of array2
    std::vector<std::vector<int>> lcs(m + 1, std::vector<int>(n + 1, 0));

    for (int i = 0; i <= m; i++) {
        for (int j = 0; j <= n; j++) {
            if (i == 0 || j == 0) {
                lcs[i][j] = 0;  // an empty prefix has no common subsequence
            } else if (array1[i - 1] == array2[j - 1]) {
                lcs[i][j] = lcs[i - 1][j - 1] + 1;  // the match extends the LCS by one
            } else {
                lcs[i][j] = std::max(lcs[i - 1][j], lcs[i][j - 1]);
            }
        }
    }
    return lcs[m][n];
}
```

Walking through the loop: when either $i$ or $j$ is $0$, at least one of the two prefixes we’re looking at is empty, so the LCS length is $0$. Otherwise, if array1[i-1] (the last element of the first prefix) equals array2[j-1] (the last element of the second prefix), then we simply add one to lcs[i-1][j-1], since that match increases the common subsequence by one number. Finally, if neither of these is the case, lcs[i][j] is the max of lcs[i-1][j] and lcs[i][j-1]. This solution runs in $O(mn)$ time and uses $O(mn)$ space, where $m$ and $n$ are the lengths of the two arrays.

Problem 3. $n$ ants are randomly put on a 1-foot string (independent uniform distribution for each ant between 0 and 1). Each ant randomly moves toward one end of the string (equal probability to the left or right) at a constant speed of 1 foot/minute until it falls off at one end of the string. Also assume that the size of the ant is infinitely small. When two ants collide head-on, they both immediately change directions and keep on moving at 1 foot/minute. What is the expected time for all ants to fall off the string? Solution. This problem is relatively simple when you start thinking about it. The most troublesome part seems to be the collisions, as each collision complicates the process and each ant can collide with another at any time. Interestingly, collisions don’t seem to be relevant to solving this question. When an ant moving to the right collides with an ant moving to the left, the result is the two ants switching directions, but there is still one ant moving left and one ant moving right (at the same speed as well). So it’s as if the ants pass through each other and collisions don’t really take place. Then, the expected time is just the expected value of the maximum time for an ant to walk off its end of the string; each such time is the distance from the ant’s starting point to the end it walks toward, which is again uniform on $[0, 1]$. This is identical to asking for the expected value of the maximum of $n$ i.i.d. random variables $X_1, \dots, X_n$ with uniform distribution on $[0, 1]$, aka an order statistic problem. Let’s find the CDF of $M = \max_i X_i$, the latest fall-off time:

$$F_M(t) = P(M \le t) = P(X_1 \le t, \dots, X_n \le t) = t^n, \qquad 0 \le t \le 1.$$

Then, we can find the PDF by differentiating to get $f_M(t) = n t^{n-1}$. The expected value of the max of the $n$ i.i.d. random variables is

$$E[M] = \int_0^1 t \cdot n t^{n-1}\, dt = \frac{n}{n+1}.$$
There is an alternate solution to this problem on the Berkeley EECS 126 site, where it’s listed as a test review problem.
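Because collisions only swap which ant is which, a simulation can skip them entirely. This hedged Monte Carlo sketch in C++ draws each ant’s start and direction on a 1-foot string at 1 foot/minute and averages the last fall-off time; the function name, trial count, and seed are arbitrary.

```cpp
#include <algorithm>
#include <cassert>
#include <random>

// Estimates E[time until all n ants are off], treating collisions as pass-throughs.
double simulateAllFallOff(int n, int trials, unsigned seed) {
    assert(n >= 1 && trials >= 1);
    std::mt19937 rng(seed);
    std::uniform_real_distribution<double> position(0.0, 1.0);
    std::bernoulli_distribution goesLeft(0.5);
    double total = 0.0;
    for (int t = 0; t < trials; ++t) {
        double latest = 0.0;
        for (int i = 0; i < n; ++i) {
            double x = position(rng);
            double time = goesLeft(rng) ? x : 1.0 - x;  // distance to its end, at speed 1
            latest = std::max(latest, time);
        }
        total += latest;
    }
    return total / trials;
}
```

For $n = 4$ ants the estimate should be close to $\frac45 = 0.8$.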

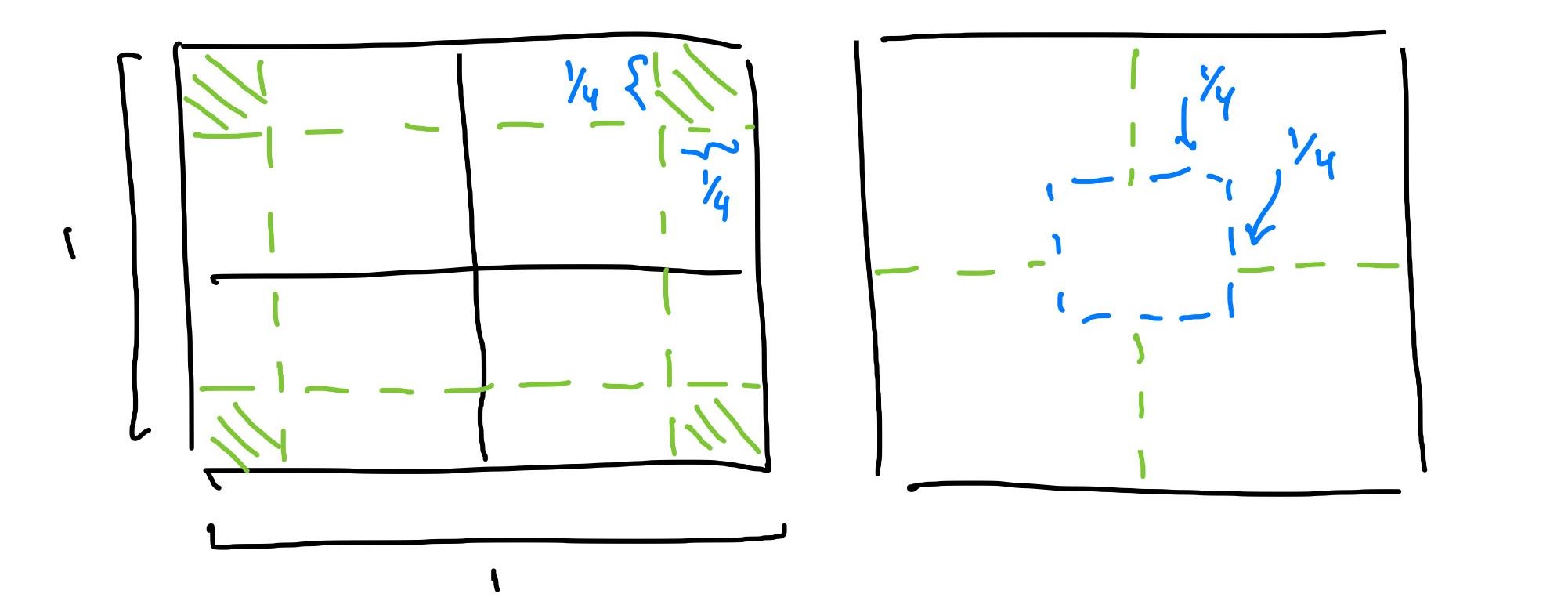

Problem 4. In a common carnival game a player tosses a penny from a distance of about five feet onto the surface of a table ruled in -inch squares. If the penny (diameter inch) falls entirely inside a square, the player receives five cents but does not get his penny back; he loses his penny otherwise. If the penny lands on the table, what is the expected payoff of the game? Solution. We’re given that the penny lands on the table, so we know that the penny will fall on a square. Then we only need to find the regions where our penny can land for it to be fully enclosed by a single square. I’ve drawn two diagrams to model two different approaches to this problem. We can first visualize the penny landing on any four-square unit, since any four adjacent squares are guaranteed to fully encompass a penny. Then, the part of the penny that associates with each corner must be squeezed into one of the four squares, which has a total area of and probability of being landed on.

Regarding the second visualization, this much more accurately reflects the possible outcomes of the penny toss, since we cannot have an edge of the penny touch a specific corner of the square. Then, we can visualize the locations that the center of the penny must reside in. The radius of the penny is so the center of the penny must reside at least inch from each side of the square, giving a smaller square of potential center points with area . Then, the expected value of the game is .
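A quick Monte Carlo check of the center-square argument in C++. The 1-inch squares and $\frac34$-inch penny in the usage below are assumed values (the original dimensions did not survive); by periodicity it is enough to drop the center uniformly into a single square. The function name is hypothetical.

```cpp
#include <cassert>
#include <random>

// Estimates P(a coin of the given diameter lands entirely inside one square),
// assuming the coin's center is uniform over the table.
double insideSquareProbability(double side, double diameter, int trials, unsigned seed) {
    assert(side > 0 && diameter > 0 && trials > 0);
    std::mt19937 rng(seed);
    std::uniform_real_distribution<double> coord(0.0, side);  // center within one cell
    const double r = diameter / 2;
    int inside = 0;
    for (int t = 0; t < trials; ++t) {
        double x = coord(rng), y = coord(rng);
        // The coin stays inside when its center is at least r from every side.
        if (x >= r && x <= side - r && y >= r && y <= side - r) ++inside;
    }
    return static_cast<double>(inside) / trials;
}
```

With those assumed sizes the estimate should be near $\left(1 - \frac34\right)^2 = \frac{1}{16}$. If the player always gives up the penny and receives 5 cents on a win, the expected net payoff under these assumptions is $5 \cdot \frac{1}{16} - 1 = -\frac{11}{16}$ of a cent.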

Problem 5. Eight eligible men and seven women happen randomly to have purchased single seats in a 15-seat row of a theater. On average, how many pairs of adjacent seats are ticketed for marriageable couples? Solution. The book that this problem is from is a bit old, so the wording is a bit old-fashioned. Essentially, we’re looking for the average number of potential male-female couples who sit next to one another. The best case and worst case scenarios follow

In order to build a marriageable couple, we need $MW$ or $WM$, that is, unlike adjacent seatings. If the two seats are unlike, then we have $1$ marriageable couple, and if not, then we have $0$. The probability of a marriageable couple in the first two seats is $\frac{8}{15} \cdot \frac{7}{14} + \frac{7}{15} \cdot \frac{8}{14} = \frac{8}{15}$. Note that $\frac{8}{15}$ is also the expected number of marriageable couples in the first two seats. The same calculation applies to any adjacent pair, so we can get the average number of marriageable adjacent pairs by multiplying the number of adjacent pairs, $14$, by the expected value for each adjacent pair: $14 \cdot \frac{8}{15} = \frac{112}{15} \approx 7.47$. Then the expected number of marriageable couples is just under half the number of people present.

Moving on to a more general case, when we have $m$ elements of one type and $w$ of another randomly arranged in a line, the expected number of unlike adjacent pairs is

$$(m + w - 1) \cdot \frac{2mw}{(m+w)(m+w-1)} = \frac{2mw}{m+w},$$

which can be shown easily by going through the same process we used for our specific case.
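Since $\binom{15}{7} = 6435$ arrangements is tiny, this C++ sketch just enumerates every seating (as bitmasks, with bit $i$ set when seat $i$ holds a woman) and averages the number of unlike adjacent pairs. It assumes every arrangement is equally likely, as the problem does; the helper name is hypothetical.

```cpp
#include <cassert>

// Average number of adjacent unlike pairs over all arrangements of `men` M's and
// `women` W's in a row, with every arrangement equally likely.
double averageUnlikeAdjacent(int men, int women) {
    const int n = men + women;
    assert(n >= 2 && n <= 20);
    long long arrangements = 0, unlikeTotal = 0;
    for (int mask = 0; mask < (1 << n); ++mask) {
        int ones = 0;
        for (int i = 0; i < n; ++i) ones += (mask >> i) & 1;
        if (ones != women) continue;  // keep only seatings with exactly `women` women
        ++arrangements;
        for (int i = 0; i + 1 < n; ++i) {
            if (((mask >> i) & 1) != ((mask >> (i + 1)) & 1)) ++unlikeTotal;
        }
    }
    return static_cast<double>(unlikeTotal) / arrangements;
}
```

`averageUnlikeAdjacent(8, 7)` returns $\frac{112}{15} \approx 7.47$, matching $\frac{2 \cdot 8 \cdot 7}{15}$.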

Day Twelve: July 3

Come back later for today’s problems!

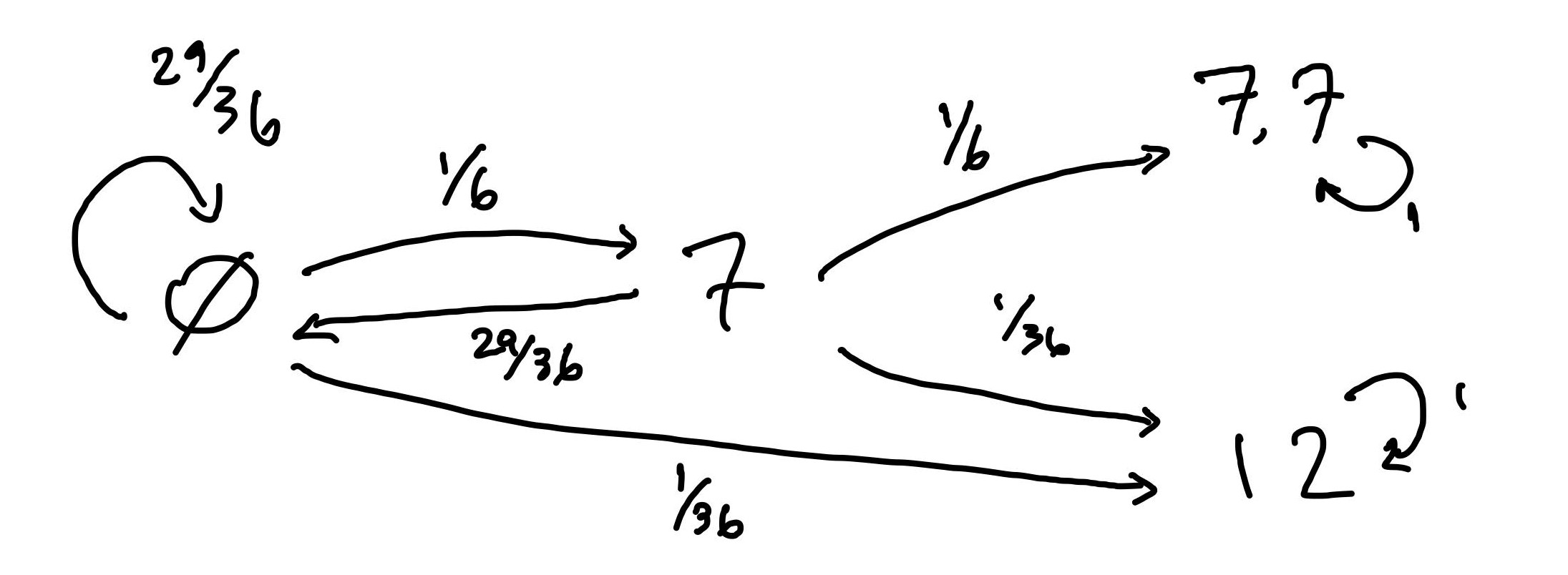

Problem 1. Two players bet on the number of rolls of the total of two standard six-faced dice. Player bets that a sum of will occur first and player bets that two consecutive ’s will be rolled before that. What is the probability that will win? Solution. This question can be solved either through conditional probability or a Markov chain approach. I’ve opted for a Markov chain since it’s much more straightforward and easily illustrated. Below is the Markov diagram for this problem. I’ve chosen to denote the event of rolling for the first time. Since every time we roll something other than a or a we essentially reset, each state gives us a certain probability of returning to our original state. It’s worth noting that after rolling the first there is still a nontrivial probability for to win, as can still be rolled in the next round.

Then we have the following system of equations to solve:

Noting that and , solving gives us that . Then, player is slightly favored to win over player in their bet.
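Because the dice totals did not survive, this C++ sketch assumes the classic version of the bet: one player wins on a 12, the other on two 7s in a row. It iterates the two-state equations (a fresh state and a just-rolled-a-7 state) until they converge; the names are hypothetical.

```cpp
#include <cassert>

// P(a 12 appears before two consecutive 7s). Per roll: P(12) = 1/36,
// P(7) = 6/36, and anything else sends us back to the fresh state.
double probabilityTwelveFirst(int iterations = 2000) {
    assert(iterations > 0);
    const double p12 = 1.0 / 36, p7 = 6.0 / 36, pOther = 1 - p12 - p7;
    double fresh = 0.0, afterSeven = 0.0;
    for (int it = 0; it < iterations; ++it) {
        double nextFresh = p12 + p7 * afterSeven + pOther * fresh;
        double nextAfterSeven = p12 + pOther * fresh;  // a second 7 ends the game for the 12 bettor
        fresh = nextFresh;
        afterSeven = nextAfterSeven;
    }
    return fresh;
}
```

Under that assumption it converges to $\frac{7}{13} \approx 0.538$ for the player betting on the 12.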

Problem 2. There are 51 ants on a square with side length of 1. If you have a glass with a radius of 1/7, can you put your glass at a position on the square to guarantee that the glass encompasses at least 3 ants? Solution. We can do this by splitting the square into $25$ sub-squares (a $5 \times 5$ grid) and using the pigeonhole principle to conclude that one of these sub-squares must have at least $3$ ants, since $51 > 2 \cdot 25$. In creating these sub-squares, we want them to cover the entirety of the original square, so each of these smaller squares will have side length $\frac15$. Note that a sub-square with side length $\frac15$ has diagonal length $\frac{\sqrt2}{5} \approx 0.283$. Meanwhile, the glass with radius $\frac17$ has a diameter of $\frac27 \approx 0.286$, so any sub-square fits inside the glass. Then, we simply need to choose the sub-square with the most ants and place our glass over it to cover at least three ants.
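The argument only works for one grid size, which a short C++ search makes visible: pigeonhole needs $51 > 2k^2$ (so $k \le 5$), and a cell’s diagonal $\frac{\sqrt2}{k}$ must fit inside the glass’s diameter $\frac27$ (so $k \ge 5$). The helper name is hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Grid sizes k (k x k cells on the unit square) for which some cell must hold at
// least 3 ants AND every cell fits inside a glass of the given radius.
std::vector<int> workableGridSizes(int ants, double radius, int maxK = 100) {
    assert(ants > 0 && radius > 0);
    std::vector<int> sizes;
    for (int k = 1; k <= maxK; ++k) {
        bool pigeonhole = ants > 2 * k * k;                    // some cell gets >= 3 ants
        bool fitsInGlass = std::sqrt(2.0) / k <= 2 * radius;  // cell diagonal <= diameter
        if (pigeonhole && fitsInGlass) sizes.push_back(k);
    }
    return sizes;
}
```

`workableGridSizes(51, 1.0 / 7)` returns just `{5}`.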

Problem 3. The probability you’ll see a falling star in the sky over the course of one hour is 0.44. What’s the probability you’ll see one over half an hour? Solution. This is a past Citadel interview question that I’ve seen variants of at other companies. We can assume that the two half hours are independent of each other and equally likely to contain a falling star. Then, the probability of not seeing a shooting star in either half-hour interval is $1 - 0.44 = 0.56$. Let $p$ be the probability that we see a shooting star in a thirty-minute interval. Then we have

$$(1 - p)^2 = 0.56.$$

Solving gives us $p = 1 - \sqrt{0.56} \approx 0.25$. This question is meant to be solved mentally, so I didn’t use any pen or paper for it. For taking the square root of $0.56$, I did a linear approximation between nearby perfect squares.
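Here is the computation in C++, along with a mental-math shortcut. The `interpolatedSqrt` helper and its endpoints 0.7 and 0.8 (from $0.49$ and $0.64$) are my assumptions about how one might do the approximation, not necessarily the original method.

```cpp
#include <cassert>
#include <cmath>

// P(at least one star in half an hour), assuming independent, identical halves:
// (1 - p)^2 = 1 - pHour.
double halfHourProbability(double pHour) {
    assert(0 <= pHour && pHour <= 1);
    return 1.0 - std::sqrt(1.0 - pHour);
}

// Linear interpolation of sqrt(x) between lo^2 and hi^2, with lo^2 <= x <= hi^2.
double interpolatedSqrt(double x, double lo, double hi) {
    assert(lo < hi && lo * lo <= x && x <= hi * hi);
    return lo + (hi - lo) * (x - lo * lo) / (hi * hi - lo * lo);
}
```

`halfHourProbability(0.44)` gives about 0.2517, and `interpolatedSqrt(0.56, 0.7, 0.8)` gives about 0.7467, i.e. $p \approx 0.253$ by hand.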

Problem 4. You are given two ropes that when lit burn in one hour. Which one of the following time periods CANNOT be measured with your ropes? a) 50 min b) 30 min c) 25 min d) 35 min. Solution. The only time period I can’t think of a solution to is min. The key to this problem is realizing that we can light the rope wherever we want. It’s easy to find minutes by burning a single rope on both ends. We can measure by first measuring minutes. Then, we measure an additional minutes by burning the second rope on both ends and additional times in the middle. This process will create two ropes, all of which burn on both of their ends. If any of these ropes burns out fully, all we need to do is light one of the other ropes in the middle. Keep repeating this process and you’ll have an additional minutes measured. To measure minutes, we can burn one of the ropes on both ends and once in the middle. If one of the two ropes formed by the burning burns out, then light the other sub-rope in the middle, making sure the rope is still burning in places. This ensures that the first rope will finish burning in minutes. Then, we need only light the second rope on both ends and two times in the middle. This ensures the second rope burns at places, and so we’ll measure an additional minutes that way.

Problem 5. You have three cards, each labeled , , , and you don’t know . All cards start facing down so you can’t see them. You flip one card. If you choose to “stay”, you get that card’s value. If you don’t “stay”, then you flip another card. Again, choose to “stay” (and keep the 2nd card’s value) or flip the final card and keep the final card’s value. Design the optimal strategy to maximize the value of the card that you choose and find the expectation of that value.

Solution. Note that your expected value will be at least . Making our decision on draw 1 means we have no information, and making our decision on draw 3 presents us with no choice. Then it makes sense for us to make our decision on round 2. Since we have the ability to draw once again after round 2, our decision is simply to take what we drew or draw again. I will refer to each draw as . Then, if

we have the sequence so we choose to draw again for ev

we have the sequence so we stay for ev

we either go up () or down () so we choose to stay for ev

we either go up () or down () so we choose to draw again for ev

Using this strategy, we combine our total expected value:

My optimal strategy is to draw the first two cards and determine whether to draw again with the thought process above with expected value . Putting it more simply, if the second draw is higher than the first, then stay, and if not, then draw again.
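To check the rule “stay if the second card beats the first, otherwise take the third,” this C++ sketch averages its payoff over all $3! = 6$ equally likely orders. The card values are inputs (the originals did not survive), and the helper name is hypothetical.

```cpp
#include <algorithm>
#include <array>
#include <cassert>

// Expected value of: flip two cards, keep the second if it beats the first,
// otherwise take the third, averaged over every order of three distinct values.
double expectedValueOfRule(std::array<double, 3> values) {
    std::sort(values.begin(), values.end());  // next_permutation starts from sorted order
    assert(values[0] < values[1] && values[1] < values[2]);
    double total = 0.0;
    int orders = 0;
    do {
        total += values[1] > values[0] ? values[1] : values[2];
        ++orders;
    } while (std::next_permutation(values.begin(), values.end()));
    return total / orders;
}
```

For values $L < M < H$ the rule is worth $\frac{L + 2M + 3H}{6}$; for example, `expectedValueOfRule({1.0, 2.0, 3.0})` returns $\frac{14}{6} \approx 2.33$, compared with $2$ for staying on the first card.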

Week Two Review:

This week I focused on working through more of A Practical Guide and starting to pull general probability questions from other resources. I’ve done a bit of market-making practice behind the scenes and am still figuring out the best way of going about that. I didn’t get to review as many topics as I had hoped to, and next week I hope to work on some more programming/statistics questions as recruiting season begins.

Here are this week’s list of resources:

- A Practical Guide to Quantitative Finance Interviews (Ch 2, 4)

- Fifty Challenging Problems in Probability

- eFinancialCareers Past Interview Questions

- Confidence Intervals

- MIT Quant Finance Presentation

- Citadel Glassdoor Questions